As the community explores the new decision optimization horizon, we’ve made the NVIDIA cuOpt GPU-accelerated solver available on the Nextmv Platform. Nextmv streamlines the process of getting up and running with a cuOpt decision app complete with API endpoints, a UI, and tools for observability, experimentation, orchestration, and collaboration.

Earlier this year, NVIDIA open-sourced the NVIDIA cuOpt decision engine for solving mixed-integer programs (MIPs), linear programs (LPs), and vehicle routing problems (VRPs). In light of the news, we all started to wonder what optimization could look like beyond CPU-based computing. Check out the video below for a Nextmv + NVIDIA cuOpt speedrun (featuring a 1,000-stop, 100-vehicle VRP example) and read on for more details 👇👇👇👇

Nextmv DecisionOps + NVIDIA cuOpt

Nextmv is a DecisionOps platform that accelerates decision model development, testing, integration, and deployment. It serves as a unique speed and trust layer for decision optimization projects, helping teams go from prototype to production faster and with more confidence.

With support for a growing ecosystem of decision intelligence solutions and compute classes, we’re particularly excited to have NVIDIA’s offerings available on Nextmv. Let’s take a look at what this means through a few different lenses.

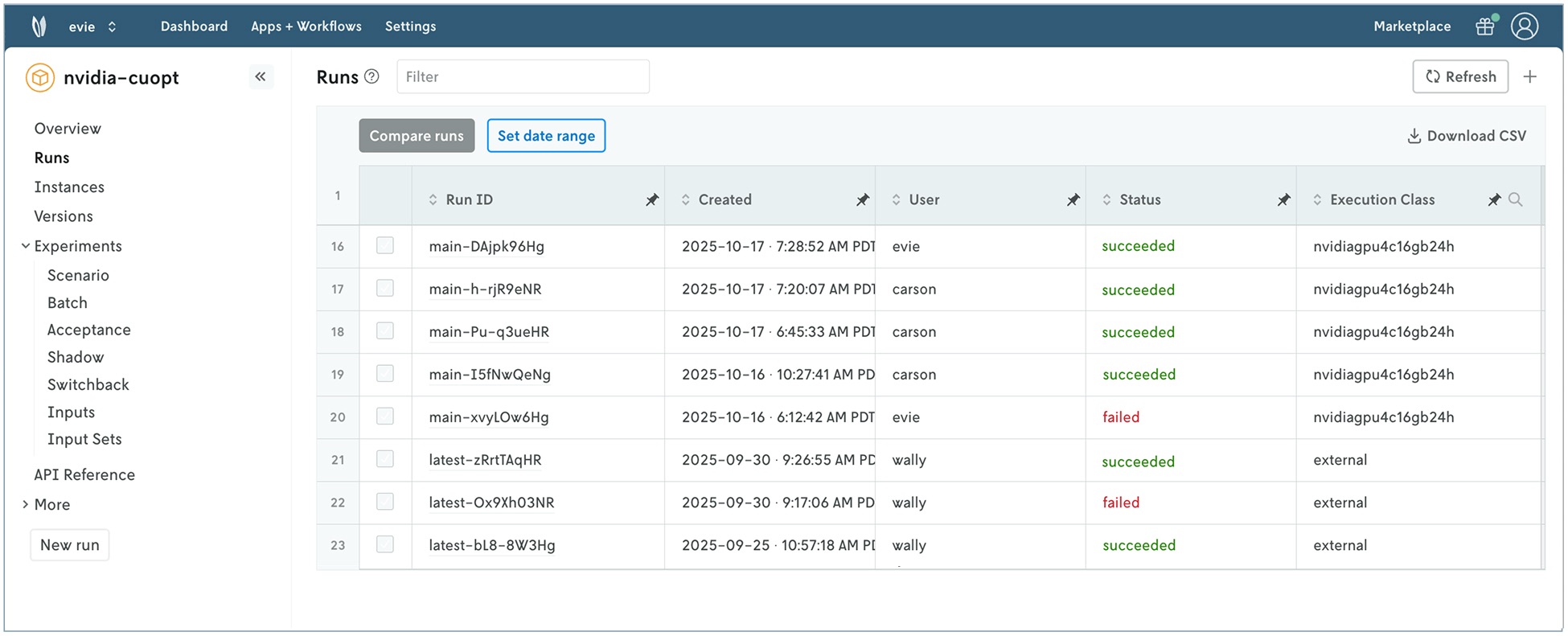

Observability: Know who did what, when, and with what I/O

When you connect a decision model to Nextmv, you have a system of record for all your runs (regardless of where they happen) in one place. This makes it easy to answer basic questions: how many runs took place, whether each run succeeded or failed, and who made them.

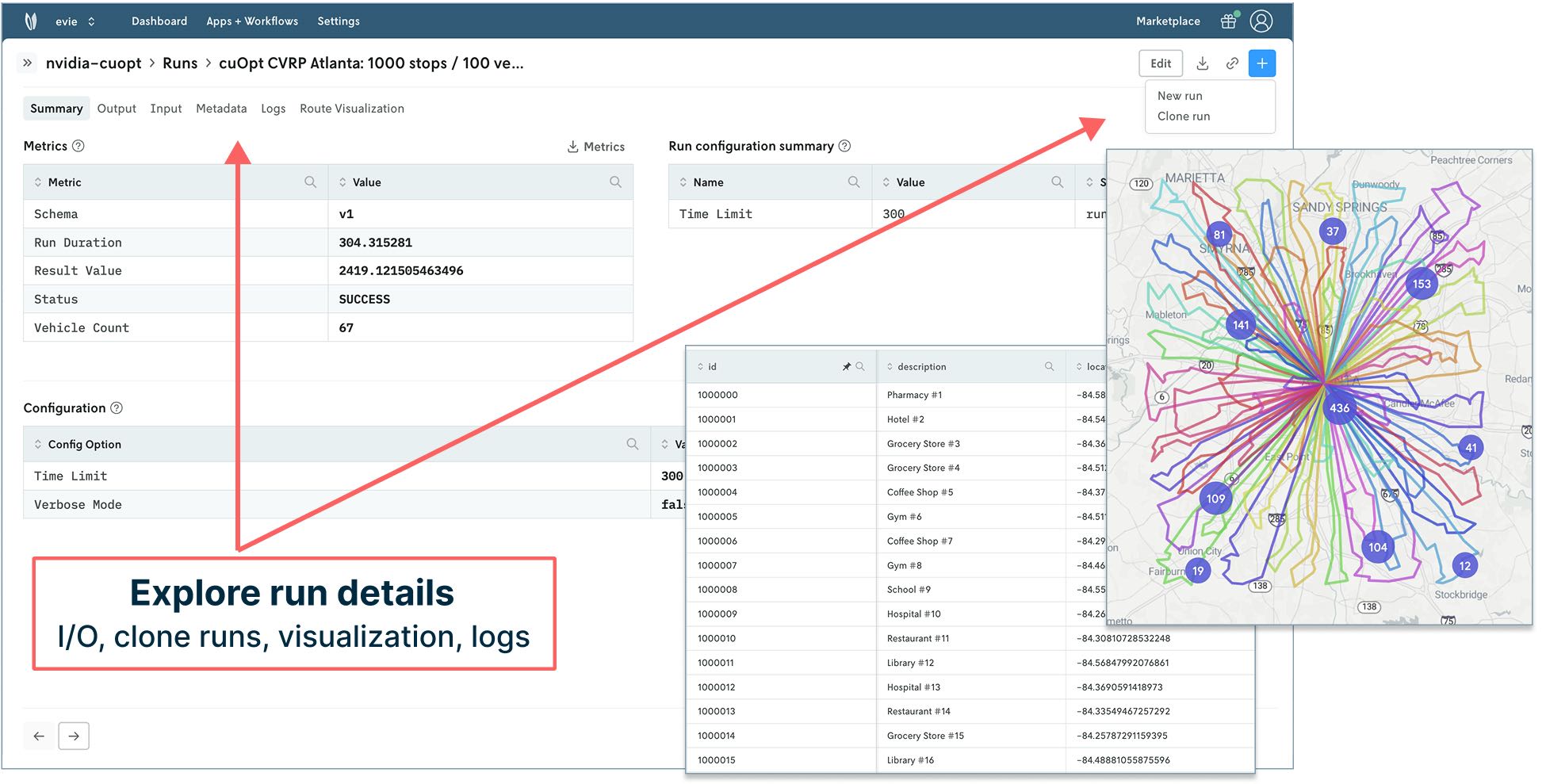

You can also dive deeper into run details to view input, output, model metrics you care about, logs, and managed visualizations. Even nicer: you can clone a run to reproduce it as-is, or modify it and re-run it.

These capabilities sidestep the process of hunting down and piecing together different inputs from different sources, recreating the environment the run executed in, and working through the series of meetings required to troubleshoot, resolve, and move on from a given issue.
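To make the system-of-record idea concrete, here is a minimal Python sketch. It is not the Nextmv run schema or SDK: `RunRecord`, `summarize`, and `clone` are hypothetical names invented for illustration, and the sample values are made up. It only shows the shape of the questions a run history answers (how many runs, how they ended, who made them) and what cloning a run with a modified input might look like.

```python
from collections import Counter
from dataclasses import dataclass, field, replace
from datetime import datetime, timezone


@dataclass(frozen=True)
class RunRecord:
    """Hypothetical record of one run; not the Nextmv run schema."""
    run_id: str
    created_by: str
    status: str  # e.g. "queued", "succeeded", or "failed"
    input: dict
    output: dict = field(default_factory=dict)
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def summarize(runs):
    """Answer the basic questions: how many runs, how they ended, who made them."""
    return {
        "total": len(runs),
        "by_status": dict(Counter(r.status for r in runs)),
        "by_user": dict(Counter(r.created_by for r in runs)),
    }


def clone(run, new_id, **input_overrides):
    """Copy a run's input (optionally modified) into a fresh, not-yet-executed record."""
    return replace(
        run,
        run_id=new_id,
        status="queued",
        input={**run.input, **input_overrides},
        output={},
        created_at=datetime.now(timezone.utc),
    )


# Made-up sample data for illustration only.
runs = [
    RunRecord("run-1", "ana", "succeeded", {"vehicles": 100, "stops": 1000}, {"value": 5120.0}),
    RunRecord("run-2", "ben", "failed", {"vehicles": 100, "stops": 1000}),
    RunRecord("run-3", "ana", "succeeded", {"vehicles": 90, "stops": 1000}, {"value": 5344.5}),
]
summary = summarize(runs)
rerun = clone(runs[1], "run-4", vehicles=110)  # retry the failed run with more vehicles
print(summary)
print(rerun.input)
```

Because the clone carries the original input along with it, reproducing or tweaking a failed run does not require reassembling the input from other sources.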

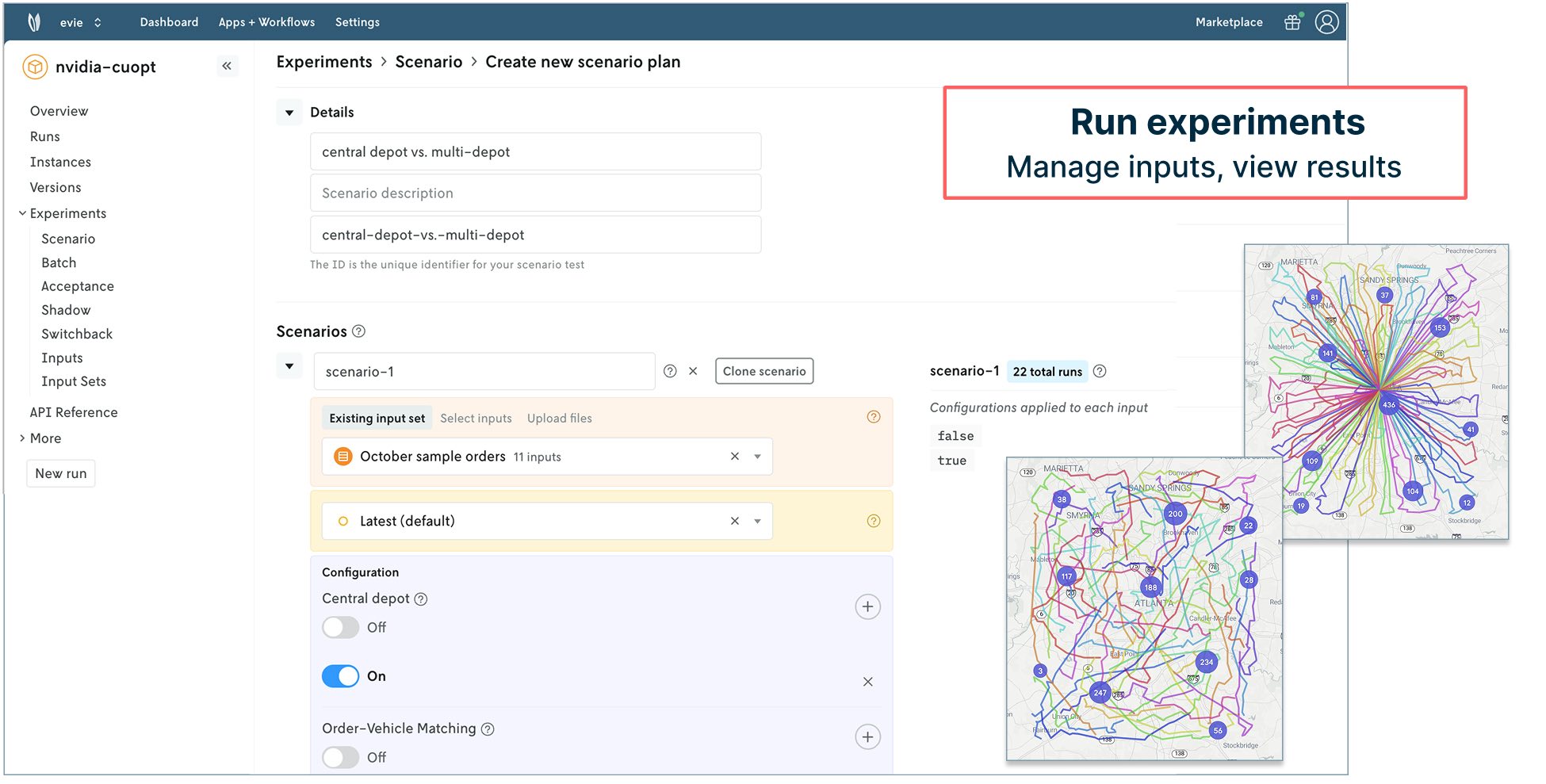

Experimentation: Reduce rollout risk and build confidence

A recent Decision Intelligence Lab podcast highlighted that half of any data science project should be dedicated to testing. I have learned many lessons from having (or lacking) the right testing framework. Nextmv makes it easy to leverage a decision optimization test bench, with a suite of experimentation tools that includes scenario tests, acceptance tests (that tie into CI/CD), shadow tests, and switchback (A/B) tests.

With each experiment you perform, Nextmv records the runs, metadata, and visualizations to reference, review, and share. There are also features for creating, managing, and sharing input sets, and you can expose options as UI components (sliders, switches, etc.) to make configuring tests even easier.
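As a rough illustration of what an acceptance test checks, the sketch below compares a candidate model against a baseline on the same input set and passes only if a chosen metric does not get worse beyond a tolerance. This is an assumption about the general technique, not Nextmv's implementation: the function name, the metric names, and the numbers are all hypothetical.

```python
from statistics import mean


def acceptance_test(baseline, candidate, metric, tolerance=0.0, lower_is_better=True):
    """Pass if the candidate's mean metric is no worse than the baseline's.

    `tolerance` is relative (0.02 allows a 2% regression). Assumes non-negative metrics.
    """
    base = mean(run[metric] for run in baseline)
    cand = mean(run[metric] for run in candidate)
    if lower_is_better:
        passed = cand <= base * (1 + tolerance)
    else:
        passed = cand >= base * (1 - tolerance)
    return {"metric": metric, "baseline": base, "candidate": cand, "passed": passed}


# Made-up results from running both models on the same three inputs.
baseline_runs = [
    {"distance_km": 100, "unassigned_stops": 0},
    {"distance_km": 120, "unassigned_stops": 0},
    {"distance_km": 110, "unassigned_stops": 0},
]
candidate_runs = [
    {"distance_km": 98, "unassigned_stops": 0},
    {"distance_km": 118, "unassigned_stops": 1},
    {"distance_km": 108, "unassigned_stops": 0},
]

results = [
    acceptance_test(baseline_runs, candidate_runs, "distance_km", tolerance=0.02),
    acceptance_test(baseline_runs, candidate_runs, "unassigned_stops"),
]
# A CI job could fail the build when any check fails.
exit_code = 0 if all(r["passed"] for r in results) else 1
for r in results:
    print(r)
```

Here the candidate shortens routes but leaves a stop unassigned in one run, so the second check fails. That is the kind of trade-off such a test is meant to surface before rollout.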

Orchestration: Scale with workflows that integrate with your stack

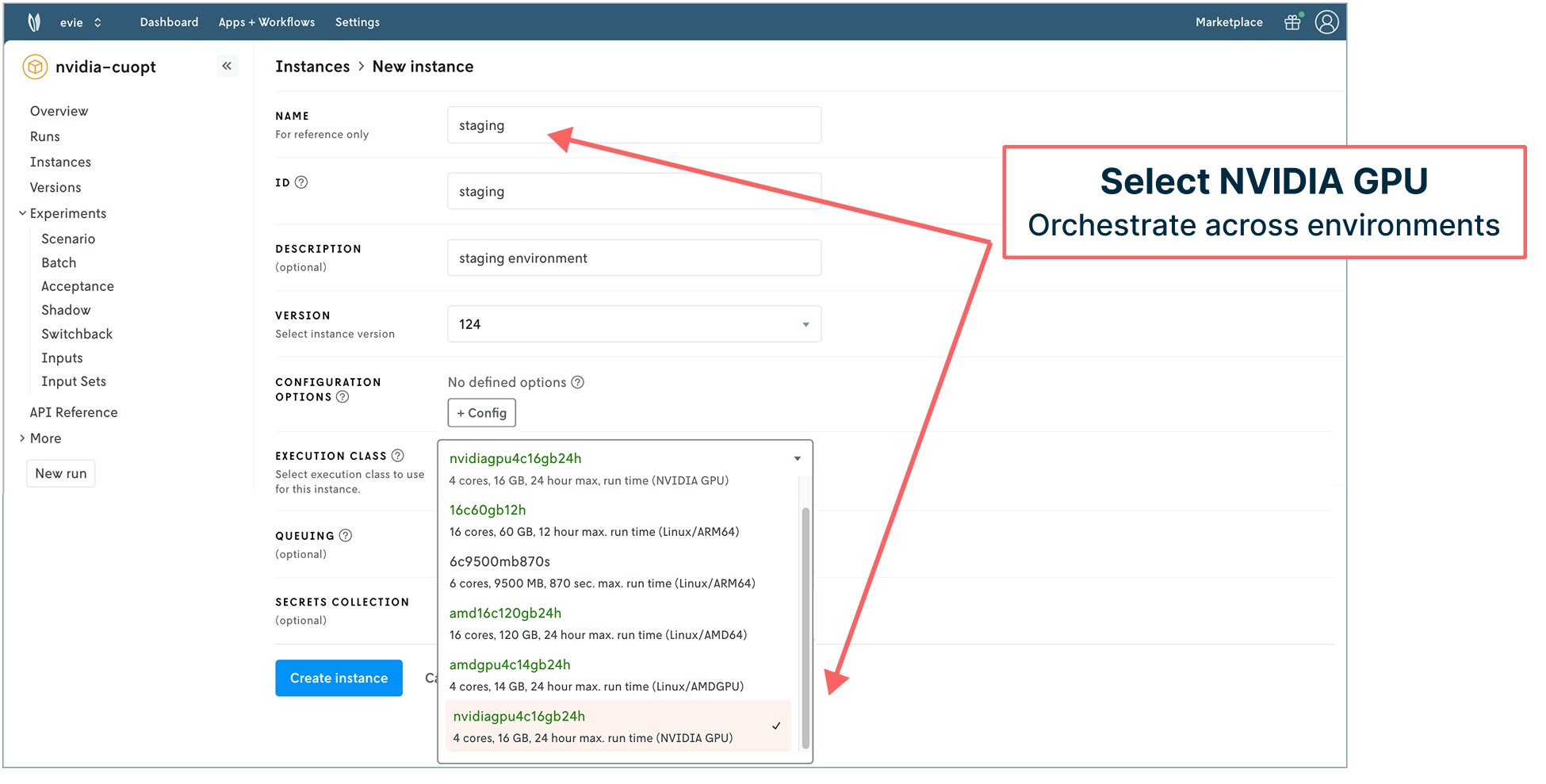

Nextmv offers a choice of compute classes, each a dropdown away. Adding the NVIDIA GPU execution class on Nextmv takes what’s possible to a new level for running cuOpt-based decision apps as well as other CUDA-enabled applications.

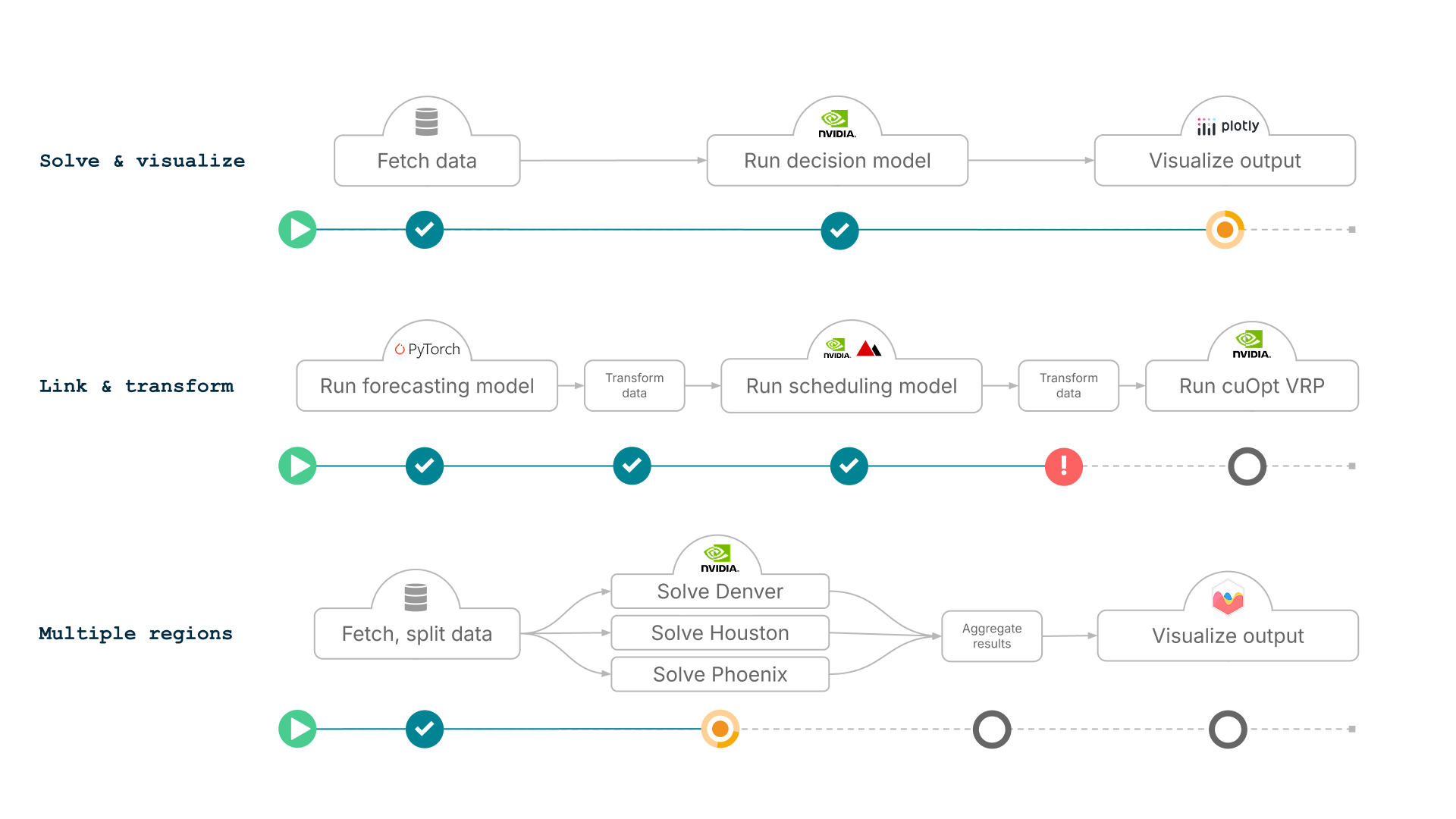

In addition to orchestrating compute, Nextmv provides tools for decision workflows that streamline automating (and auditing) a series of steps. This includes anything from fetching data to linking multiple apps, running multiple instances of an optimization, and aggregating and visualizing the results.
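The steps described above can be pictured with a small, self-contained Python sketch: fetch data, fan out several runs of the same model with different settings, then aggregate the results while keeping an ordered log of what happened. Everything here is a stand-in. The step functions are hypothetical, `solve` uses a toy cost formula rather than a real solver, and none of it reflects the Nextmv workflow API.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_data():
    """Step 1: stand-in for pulling input from a database or API."""
    return {"stops": 12, "vehicle_options": [2, 3, 4]}


def solve(stops, vehicles):
    """Step 2: stand-in for one optimization run (toy cost formula, not a solver)."""
    cost = vehicles * 50 + (stops / vehicles) * 20  # fixed fleet cost + route length proxy
    return {"vehicles": vehicles, "cost": cost}


def aggregate(results):
    """Step 3: pick the cheapest configuration and keep all results for review."""
    best = min(results, key=lambda r: r["cost"])
    return {"best": best, "all": results}


def run_workflow():
    audit = []  # ordered log of completed steps, for auditing
    data = fetch_data()
    audit.append("fetch_data")
    # Run one instance per fleet size in parallel; map() preserves input order.
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda v: solve(data["stops"], v), data["vehicle_options"]))
    audit.append(f"solve x{len(results)}")
    summary = aggregate(results)
    audit.append("aggregate")
    return summary, audit


summary, audit = run_workflow()
print(summary["best"])
print(audit)
```

Keeping each step as its own function is what makes the sequence easy to automate and audit: any step can be swapped out or re-run without touching the others.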

Collaboration: Deliver decision model value to stakeholders faster

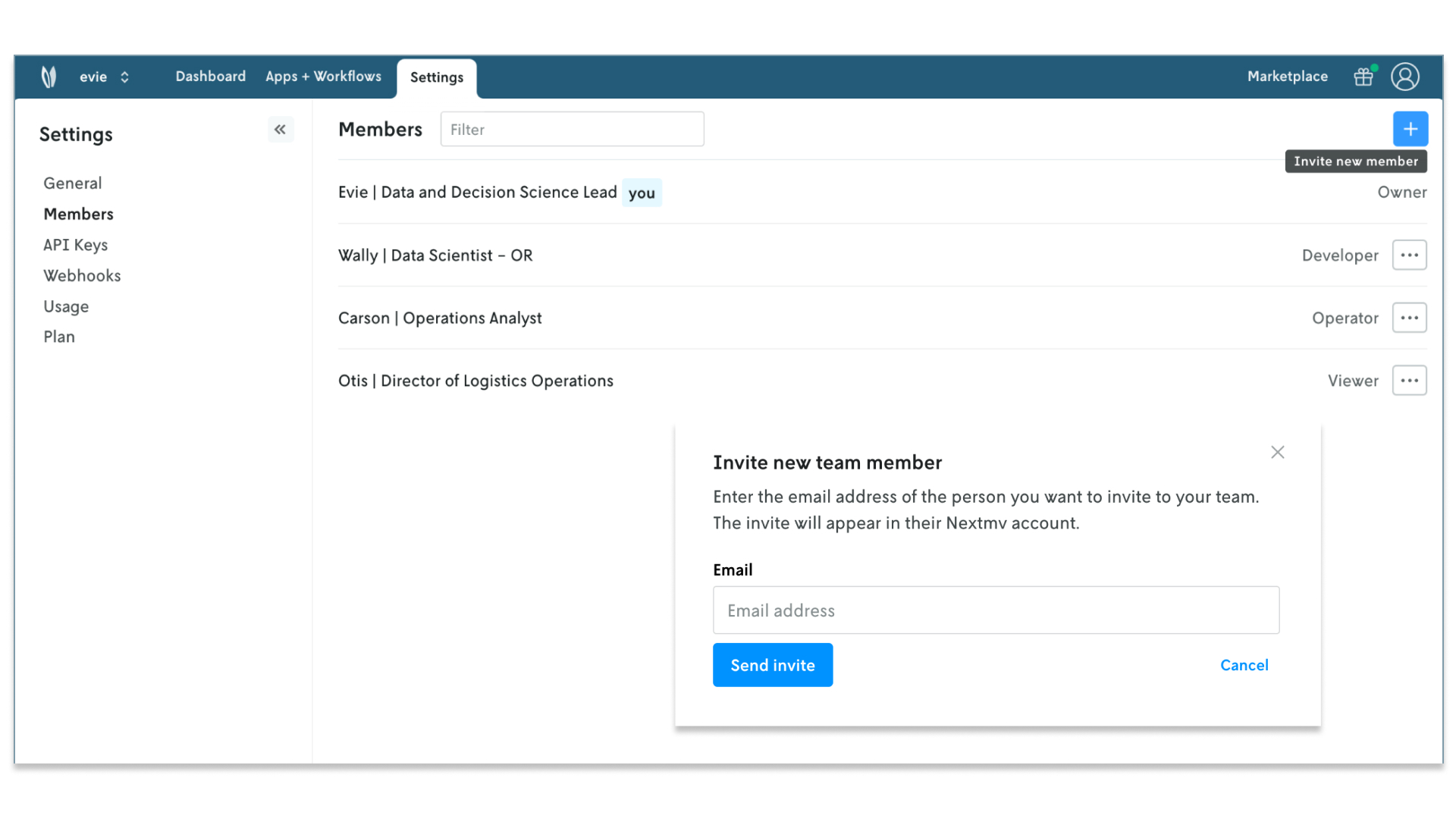

Operationalizing decision optimization is a team sport. Modelers design the algorithms. Software teams integrate and monitor them. Operators improve the business with model output. Nextmv makes it easy to invite stakeholders to a workspace and assign permissions that provide the right level of interaction.

Decision optimization is a powerful tool for generating plans in the face of complexity, time limits, scale, and other challenges. Having humans in the loop to explore and understand optimized plans is an integral part of the process to ensure smooth operations.

How to get started with Nextmv + NVIDIA cuOpt

You can get started with the Nextmv + NVIDIA cuOpt experience via the NVIDIA cuOpt community app on GitHub by running it on a local GPU and then syncing runs to a free Nextmv account. When you sync local runs to Nextmv, you’ll see information appear in the Nextmv UI that’s useful for building out a system of record for tracking and organizing your model development.

When you’re ready to push your decision model code to Nextmv and use GPU-accelerated compute to perform experiments, manage versions and instances, and collaborate with teammates, send us a message.

There’s a grand opportunity before all of us in the optimization community to reimagine how decision algorithms work in a GPU context alongside the exciting work already happening in the space today. (I, for one, remain particularly excited about what it will look like to run optimization on NVIDIA’s latest GPU architecture.) Nextmv is proud to work with NVIDIA on this release and to be part of this bold next step in the decision optimization space.

May your solutions be ever improving 🖖