Welcome to the latest edition of our release roundup! Dive right into new features of the Nextmv platform.

Compare the output of decision models

Batch experiments

Measuring the potential business impact of changes to a decision model can be challenging from both a process and resource perspective. If you add a new constraint, will there be unintended side effects? Are metrics still trending in the right direction? Find (and share) the answers using batch experiments on the Nextmv platform. With our testing framework, there’s a smoother path to production and CI/CD for your decision models.

Get started by signing up for a free Nextmv account.

What is it?

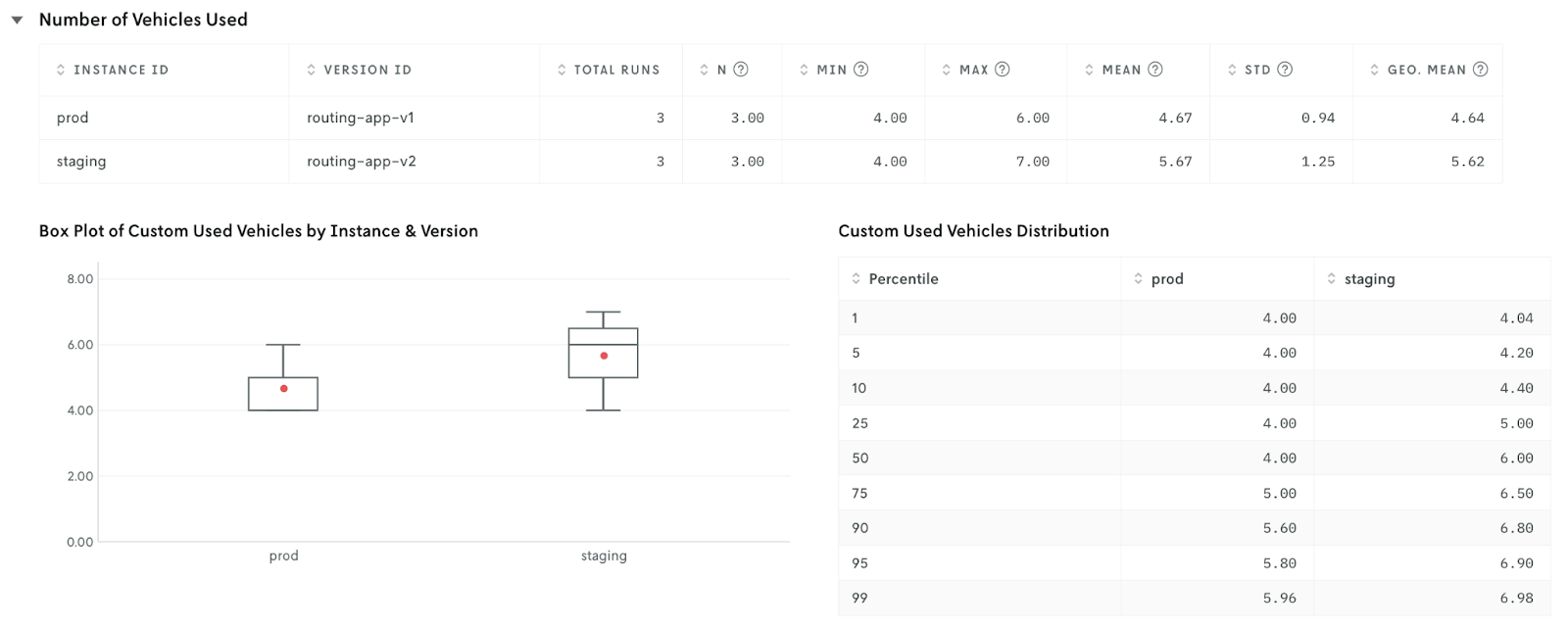

Batch experiments are tests that compare the output of one or more models using a consistent set of inputs. These experiments return the output metrics (including any custom metrics) and summary stats.

Why use it?

Batch experiments are often used as exploratory tests to understand the impacts of model updates on business KPIs. For example, imagine you work at a farm share company that picks up produce boxes from local farms and delivers them to customers’ homes. Drivers have been experiencing burnout as the business grows, so you want to understand the impact of creating driver shifts to keep their hours capped. You run a batch experiment using your current model in production (without shifts) vs your new model in staging (with shifts). The results allow you to compare output metrics like the solution value, number of unassigned stops, and number of vehicles used so you can make an informed decision on next steps.
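
To make the comparison concrete, here is a minimal Python sketch of the arithmetic behind the farm share example: two models, the same three inputs, and a per-metric average for each. It does not call the Nextmv platform or reproduce its output schema. The model labels, field names, and numbers are all made up for illustration.

```python
# Hypothetical per-input metrics from two models run on the same three inputs.
# Names and numbers are invented for illustration; this is not Nextmv's output schema.
results = {
    "production (no shifts)": [
        {"input": "mon", "value": 1200.0, "unassigned_stops": 0, "vehicles_used": 4},
        {"input": "tue", "value": 1500.0, "unassigned_stops": 0, "vehicles_used": 5},
        {"input": "wed", "value": 900.0, "unassigned_stops": 0, "vehicles_used": 3},
    ],
    "staging (with shifts)": [
        {"input": "mon", "value": 1300.0, "unassigned_stops": 1, "vehicles_used": 5},
        {"input": "tue", "value": 1650.0, "unassigned_stops": 2, "vehicles_used": 6},
        {"input": "wed", "value": 950.0, "unassigned_stops": 0, "vehicles_used": 4},
    ],
}

METRICS = ("value", "unassigned_stops", "vehicles_used")


def summarize(runs):
    """Return the mean of each metric across one model's runs."""
    return {m: sum(r[m] for r in runs) / len(runs) for m in METRICS}


# One summary per model, keyed by the model label.
summary = {model: summarize(runs) for model, runs in results.items()}

# Difference between the candidate (staging) and the baseline (production).
baseline = summary["production (no shifts)"]
candidate = summary["staging (with shifts)"]
delta = {m: candidate[m] - baseline[m] for m in METRICS}

for metric in METRICS:
    print(f"{metric}: {baseline[metric]:.1f} -> {candidate[metric]:.1f} ({delta[metric]:+.1f})")
```

In this invented data, the shift-constrained model uses more vehicles and leaves more stops unassigned on average, which is the kind of trade-off a batch experiment is meant to surface before a change reaches production.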

In general, batch experiments help you do two things as you move a model toward production.

The first is comparing output metrics from one or more models, including:

- Summary stats from the output of each model (e.g., solution value)

- Details and comparison of specific metrics or custom metrics (e.g., the number of vehicles used for route optimization)

The second is identifying impacted KPIs and deciding which actions to take, such as:

- Updating constraints (e.g., adding shift times to the model)

- Updating solver configuration (e.g., decreasing run time)

- Alerting stakeholders to the potential impacts of moving forward (e.g., including driver shifts will increase the number of unassigned stops if no additional changes are made to the model or config)

Stakeholders across an organization often have requirements that involve updating a model. An operator might see an opportunity to improve user experience. The finance team might need to adhere to a new annual budget. The data science team might want to improve solver performance.

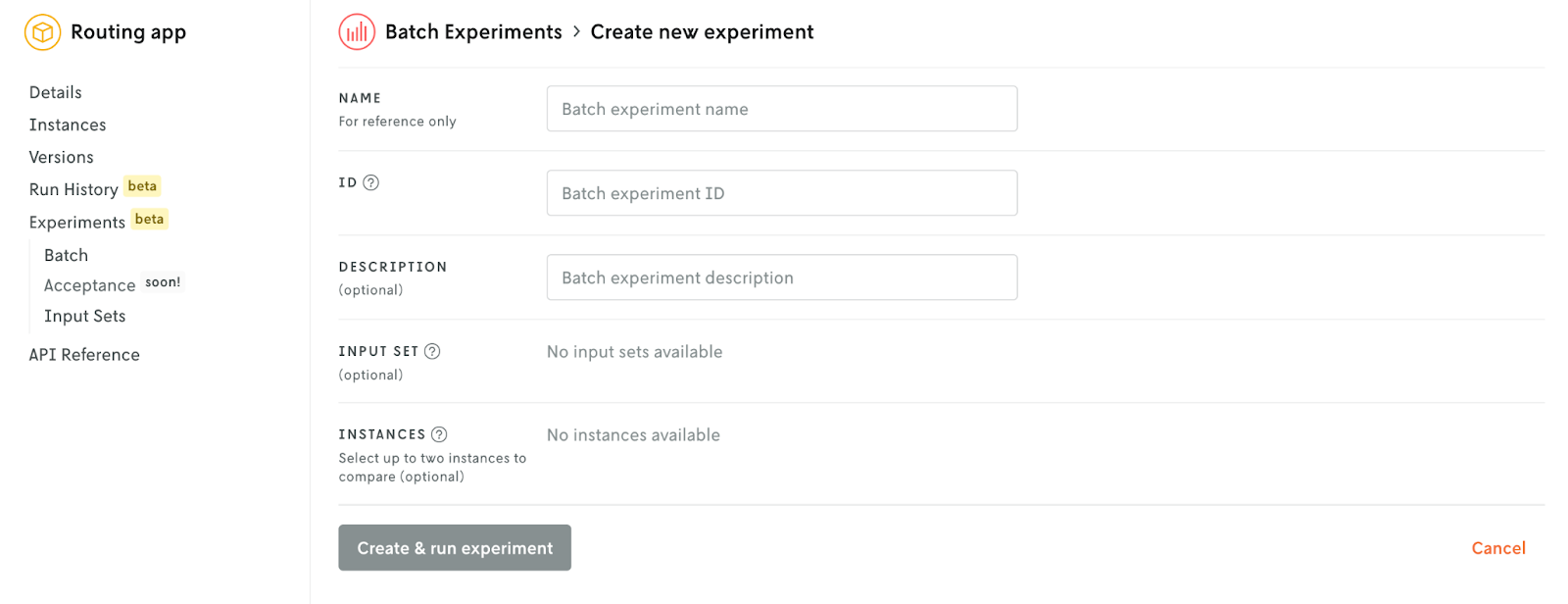

So what’s the next step to answer a question like, “Does the model in staging with new constraints have more unassigned stops than the model in production?” The answer can be found in the results of a batch experiment. Head to “Experiments” under the app you’re interested in testing, and create a new batch experiment directly in the console.

Better insight into decision app details

When you’re developing, testing, and deploying decision models, the more context you have, the better. A Nextmv decision app holds everything you need to solve a specific problem with your model, including the code itself, instances and versions, run history, experiments, and more. And you can share it all with your teammates by inviting them to a shared workspace in the Nextmv console. Let’s look at the latest features that make your apps more robust and allow for simpler collaboration.

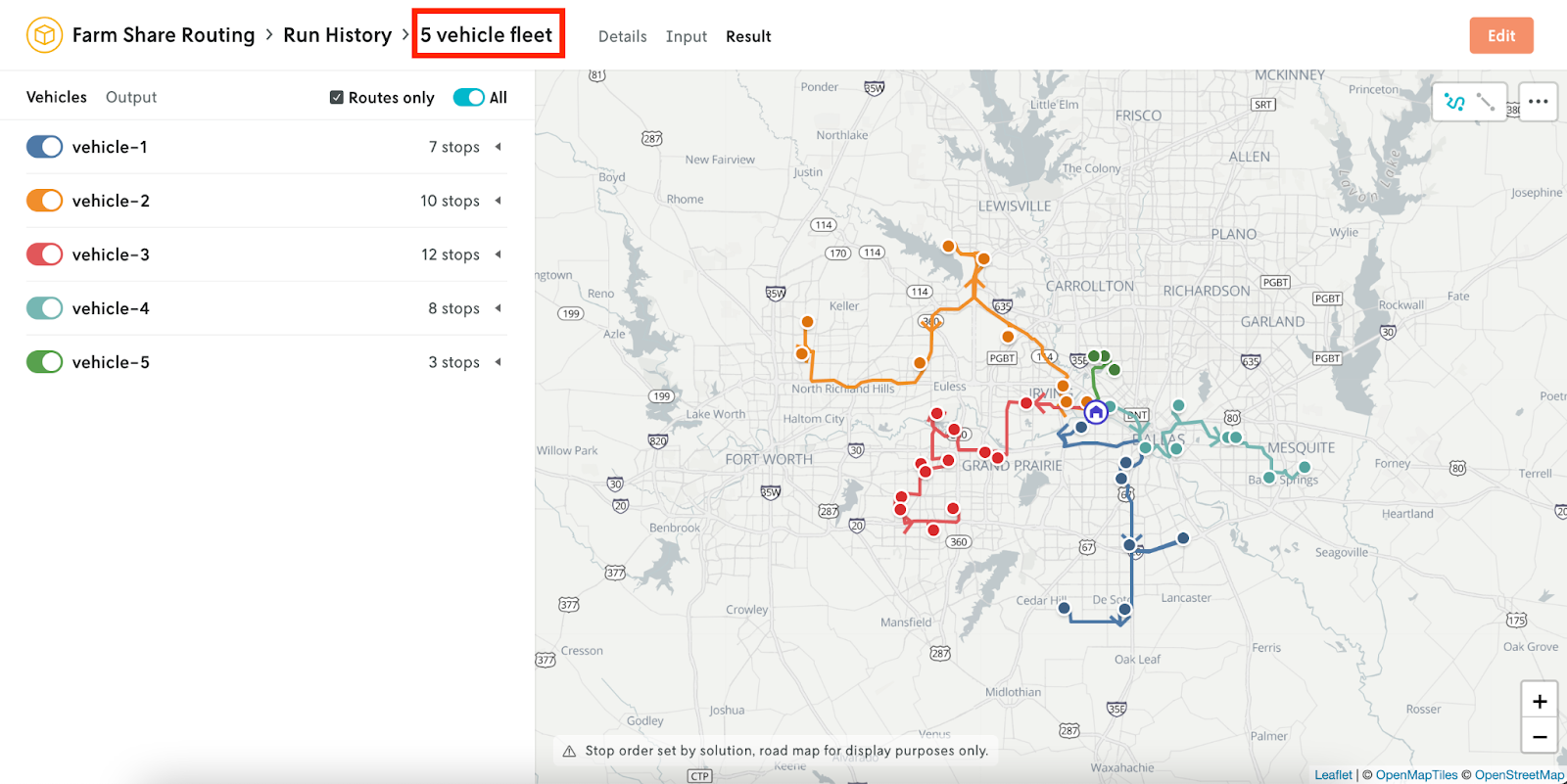

Name individual app runs

What is it?

Give descriptive names to specific runs and provide instant context for anyone reviewing the run history of your decision app – whether that’s future you or another team member.

Why use it?

Identify runs by whatever variable or convention makes them meaningful to you.

Here are a few examples of when you may want to name a run after seeing the output:

- You perform a run and notice strange-looking routes → You might name this one, “Z-shaped routes”

- You perform a run and it’s doing exactly what you’d hoped → Success! You might name this one, “Shorter service times, no unassigned stops”

- You perform a run that you want to be able to reference later → It has a larger number of stops than usual, and you want to come back to it. You might name this one, “100 stops”

What if you’d like to categorize the run regardless of the output? Here are a few examples of category names:

1. Type of input:

- Big vs small → “10 stops and 2 vehicles” vs “100 stops and 20 vehicles”

- Constraints included → “Included driver shifts”

- Golden file → “Routing standard input”

2. Project/experiment name → “Mixed fleet - run 1”

3. Production vs staging vs dev → “Dev run 1”

4. Team performing the run → “OR team - run 1”

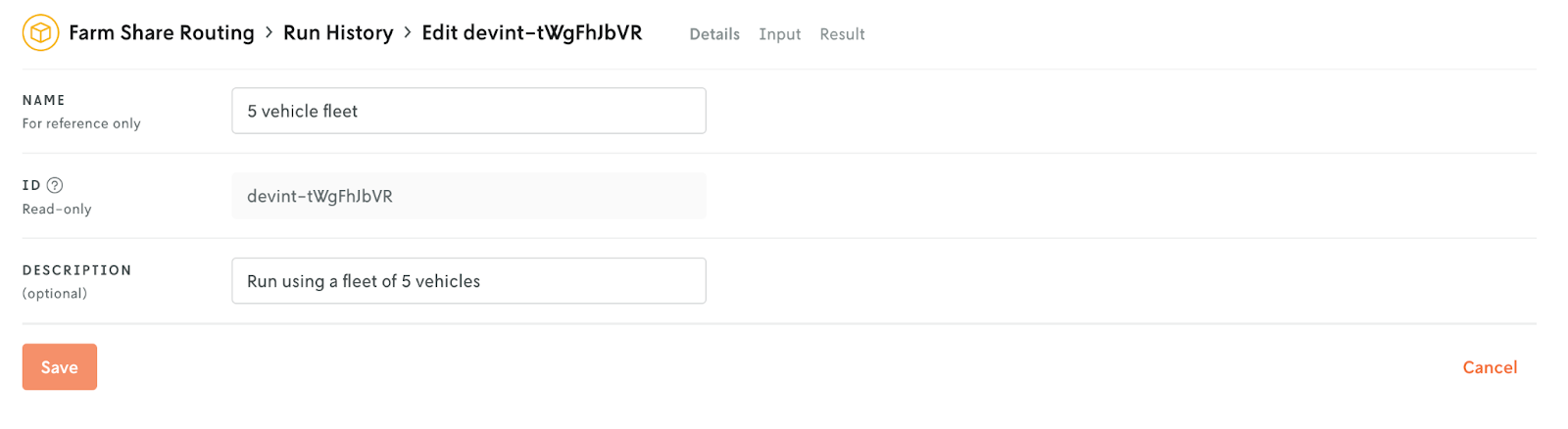

There are two ways to name your runs. You can create a name as you prepare to execute the run (directly in the Nextmv CLI or via the API) or you can edit the name in the Nextmv console after you’ve performed the run.

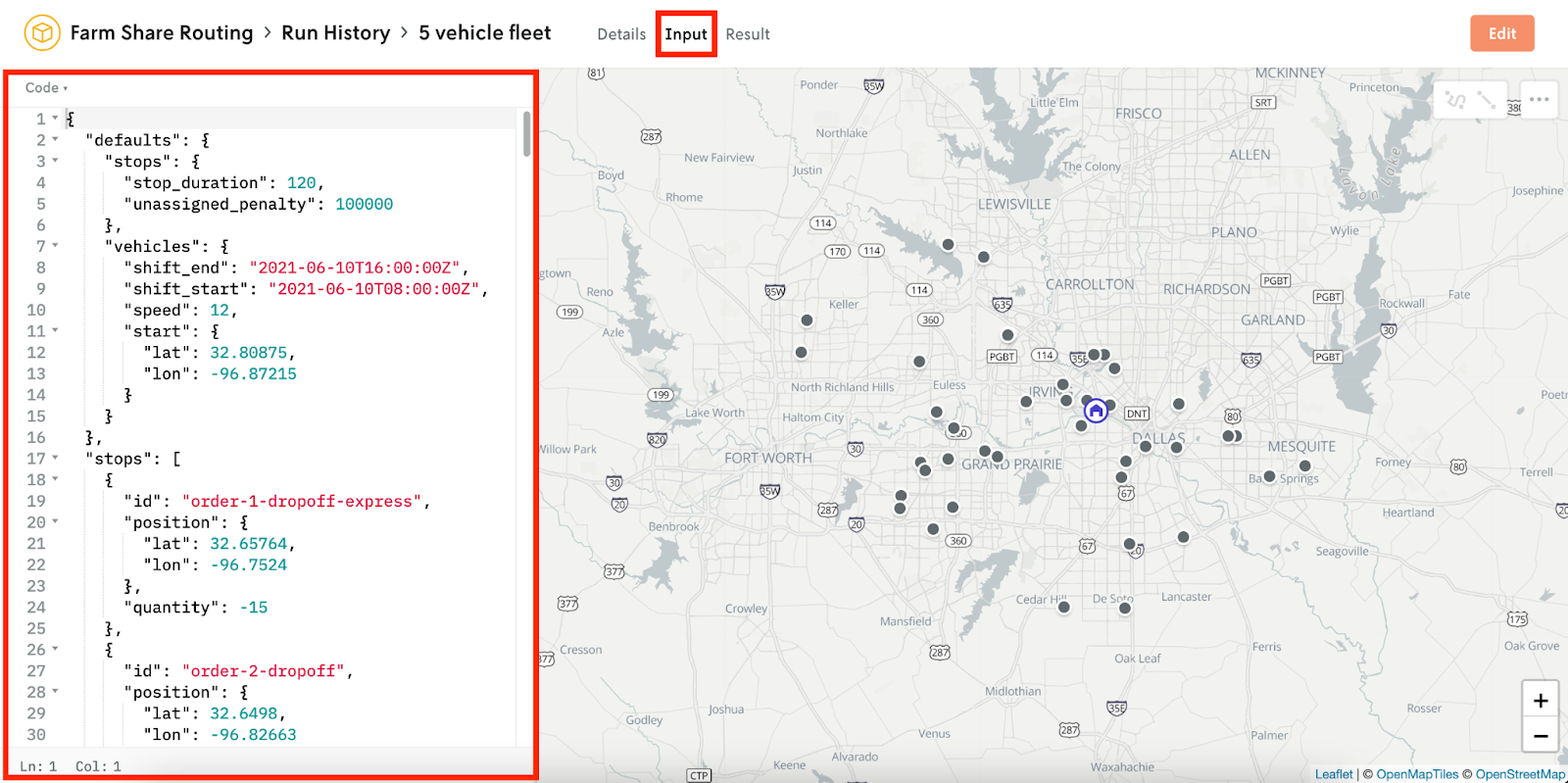

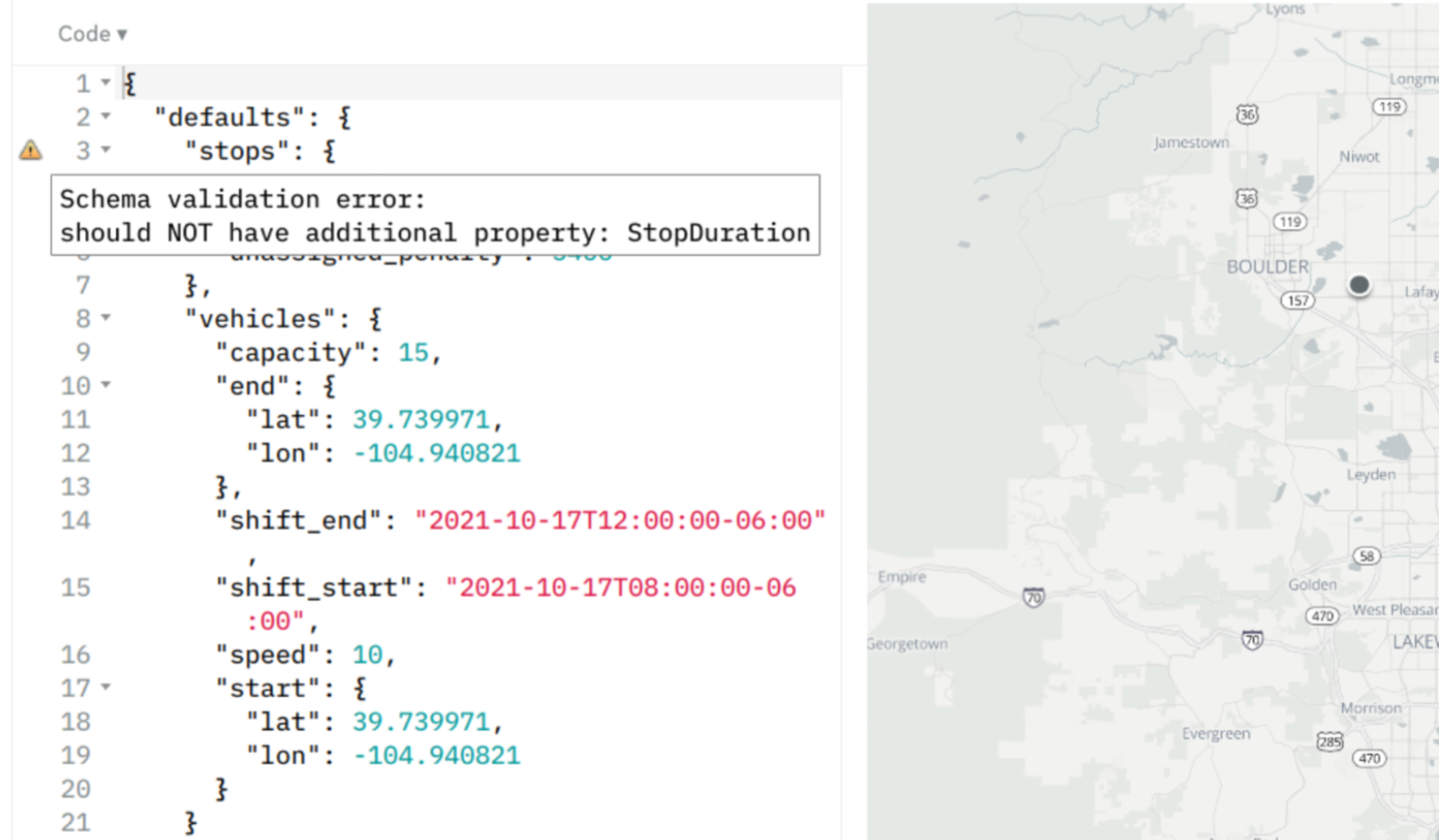

See the input of each run

What is it?

Run details now include the input used for every run. After a run is performed, you can view its input in the Nextmv console. Click into the run history of your app, choose the run you’re interested in reviewing, and then select “Input” at the top.

Why use it?

Get the complete picture of a run – starting from the input. Let’s revisit our farm share company example. Why might you want to look at the input?

- You notice in the run history that a run failed → The run with an additional 10 farm pickups failed. Is there a schema issue?

- The output isn’t what you were expecting → Is there an issue with the capacity of the vehicles we’re using to pick up produce?

Filter, sort & search

What is it?

The tables that display the run history of your app allow you to filter by terms, sort by dates, and search across any field.

Why use it?

As with any other app that’s critical to your business, investigation and exploration should be straightforward. Find what you need without changing context.

Here are a few ways to work with the table of app run history:

- Search by the name of the run

- Sort by date and time

- Filter by the instance or version ID

- View only the runs that failed
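
If you keep your own record of runs outside the console, the same operations are easy to picture in code. The sketch below is a local Python illustration only: the run records, field names (`name`, `created_at`, `instance_id`, `status`), and helper functions are hypothetical and are not the Nextmv console or API.

```python
from datetime import datetime

# Hypothetical run records; field names and values are assumptions for illustration.
runs = [
    {"name": "Z-shaped routes", "created_at": datetime(2023, 9, 1, 9, 30),
     "instance_id": "staging", "status": "failed"},
    {"name": "100 stops", "created_at": datetime(2023, 9, 3, 14, 0),
     "instance_id": "production", "status": "succeeded"},
    {"name": "Dev run 1", "created_at": datetime(2023, 9, 2, 11, 15),
     "instance_id": "staging", "status": "succeeded"},
]


def search_by_name(records, term):
    """Case-insensitive substring search on the run name."""
    term = term.lower()
    return [r for r in records if term in r["name"].lower()]


def filter_by(records, field, value):
    """Keep only the records whose field equals the given value."""
    return [r for r in records if r[field] == value]


def newest_first(records):
    """Sort records by creation time, most recent first."""
    return sorted(records, key=lambda r: r["created_at"], reverse=True)


failed_runs = filter_by(runs, "status", "failed")
staging_runs = filter_by(runs, "instance_id", "staging")
stop_runs = search_by_name(runs, "stops")
ordered_names = [r["name"] for r in newest_first(runs)]
```

Each helper returns a new list, so the calls can be chained, for example to list failed staging runs from newest to oldest.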

Explore more functionality with sample apps

What is it?

The Nextmv console is pre-populated with sample apps that are complete with runs to expand and experiments to analyze.

Why use it?

The two apps, Farm Share Routing and Farm Share Scheduling, demonstrate the type of app management and experimentation that you can take advantage of for your own use cases.

Start using the features today

Ready to see it all for yourself? Trying out these features is as easy as signing up for a free Nextmv account.

Have questions? Reach out to us anytime, post a question on our forum, or check out our docs. We love chatting all things optimization, automation, and testing.